This article by Rob Poirier first appeared on the OneStream Software blog.

Organizations everywhere rely on data management to make informed decisions and improve the bottom line. But with the vast amount of data available today, things can quickly get out of control and spawn data gremlins (i.e., little pockets of disconnected, ungoverned data) that wreak havoc on the organization.

Remember the 1984 comedy horror film Gremlins, in which adorable creatures turned destructive and mischievous when fed after midnight (see Figure 1)?

In the same way, data gremlins (aka “technical debt”) can arise when your systems are not flexible enough to let Finance deliver. Effectively managing data so that gremlins can’t wreak havoc is crucial in any modern organization, and that starts with understanding how data gremlins arise.

Do You Have Data Gremlins?

How do data gremlins arise, and how do they proliferate so rapidly? It all started with Excel. People in Finance and Operations would receive big stacks of green bar reports (see Figure 2). Don’t remember those? They were long printouts on striped, continuous-feed paper, and they were filed and stacked in big rooms.

When you needed data, you pulled the report, re-typed the data into Excel, added some formatting and calculations, printed the spreadsheet and dropped it in your boss’s inbox. Your boss would then review it and make suggestions and additions until confident (a relative term here!) that sharing the spreadsheet with upper management would be useful. And voilà, a gremlin is born.

And gremlins are bad for the organization.

The Rise of Data Gremlins

Data gremlins are not a new phenomenon, but they can severely impact the organization, leading to wasted time and resources, lost revenue and a damaged reputation. For that reason, a robust data management strategy is essential to keep data gremlins from causing havoc.

As systems and integration became more sophisticated and general ledgers became a reliable book of record, data gremlins should have faded out of existence. But did they? Nope, not even a little. In fact, data gremlins multiplied faster than ever, partly because of the most popular button on any report anywhere at the time: you guessed it, the “Export to .CSV” button. Creating new gremlins became even easier and faster, and management habitually asked for more and more analysis that could easily be built in spreadsheets (see Figure 3). To meet the demand, Microsoft raised the row limit of a single worksheet from 65,536 to just over a million (1,048,576) with Excel 2007, released to business customers in late 2006. A million! And people cheered!

However, errors were buried in those million-row spreadsheets, not just in some of them but in almost ALL of them. The spreadsheets had no overarching governance and could not be automatically checked in any way, so those errors could live on for months or even years. Any consultant who has worked with those spreadsheets can share stories of someone adding “+1,000,000” to a formula as a last-minute adjustment and then forgetting to remove it. Major companies reported incorrect numbers to the street, and people lost jobs over such errors.

As time passed, the tools got more sophisticated, from MS Excel and MS Access to departmental planning solutions such as Anaplan, Essbase, Vena Solutions, Workday Adaptive and others. Yet none of them meets the level of reliability IT is tasked with achieving, and their controls and audits are nothing compared to what enterprise resource planning (ERP) solutions provide. So why do such tools continue to proliferate? Who feeds them after midnight, so to speak? The real reason is that these departmental planning systems are like a hammer. Every time a new model or analysis need crops up, it’s “Let’s build another cube.” Even if that particular data structure is not the best answer, Finance has a hammer, and they are going to pound something with it. And why would they stop to consider how quickly the gremlins are multiplying (see Figure 4)?

The mega ERP vendors’ answer is simply to stop doing that. In their view, end users don’t really need that data, that level of granularity or that flexibility; they need to learn to simplify and stop worrying about trivial things. For example, end users supposedly don’t need visibility into what happens in a legal consolidation or a sophisticated forecast. “Just trust us. We will do it,” the ERP vendors say.

Here’s the problem: Finance is tasked with delivering the right data at the right time with the right analysis. What is the result of just “trusting” ERP vendors? Even more data gremlins, more manual reconciliations, and less security and control over the company’s most critical data. In other words, an organization can easily spend $30M on a secure, well-designed ERP system that doesn’t fix the gremlin problem, which is exactly why organizations must control the data management process.

The Importance of Controlling Data Management and Reducing Technical Debt

Effective data management enables businesses to make informed decisions based on accurate and reliable data. But it is control over data management that prevents data gremlins and reduces technical debt. Gremlins perpetuate data quality issues, which arise when proper data management practices aren’t in place. These issues include incomplete, inconsistent or inaccurate data, leading to incorrect conclusions, poor decision-making and wasted resources.

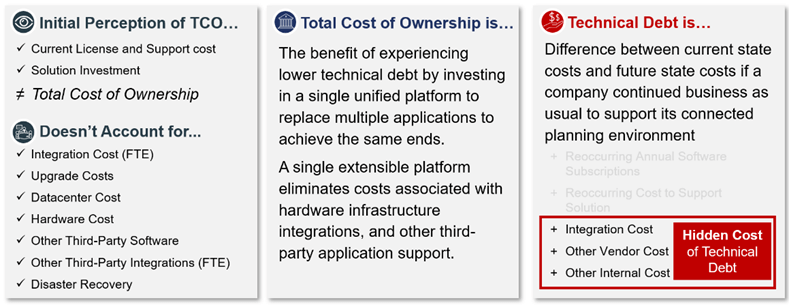

One of the biggest oversights when dealing with data gremlins is focusing only on Return on Investment (ROI) and dismissing the fully burdened cost of technical debt (see Figure 5). Many finance teams use performance measurements like Total Cost of Ownership (TCO) and ROI to confirm that a solution is good for the organization, but organizations rarely dig deeper, beyond those measurements, to find opportunities to reduce implementation and maintenance waste. Unfortunately, this view does not account for the hidden complexities and costs that come with data gremlin growth.

Technical Debt is MORE than the Total Cost of Ownership

Unfortunately, data gremlins can occur at any stage of the data management process, from data collection to analysis and reporting. They can be caused by various factors, such as human error, system glitches or even malicious activity. For example, a data gremlin could be a missing or incorrect field in a database that produces inaccurate calculations or reports.

Want proof? Just think about the long, slow process you and your team engage in when tracking down information between fragmented sources and tools rather than analyzing results and helping your business partners act. Does this drawn-out process sound familiar?

The good news is that another option exists. Unifying these multiple processes and tools can provide more automation, remove the complexities of the past and meet the diverse requirements of even the most complex organization, both today and well into the future. The key is building a flexible yet governed environment in which problems can be solved and new analyses created within the framework, with no gremlins.

Sunlight on the Data Gremlins

At OneStream, we’ve lived and managed this complicated relationship our entire careers. We even made gremlins back when the answer for everything was “build another spreadsheet.” But we’ve also eliminated spreadsheet gremlins only to replace them with departmental apps or cubes that turned out to be bigger, nastier gremlins. Our battle scars have taught us that gremlins, while easy to use and manage, are not the answer. Instead, they proliferate and create newer, bigger, harder-to-solve problems of endless data reconciliation.

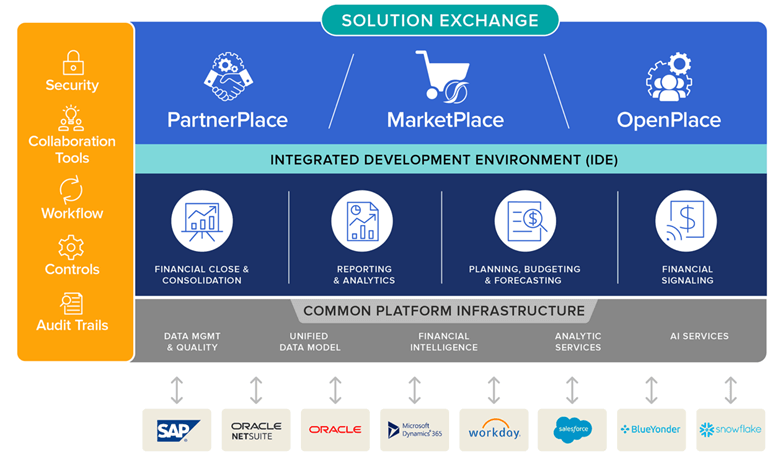

For that reason, OneStream was designed and built from the ground up to eliminate gremlins, including the need for them in the future (see Figure 6). OneStream combines all the security, governance and audit needed to ensure accurate data, and it does all of this in one place, without data having to walk off-premises, while still allowing flexibility inside the centrally defined framework. In OneStream, organizations can leverage Extensible Dimensionality to let end users “do their thing” without having to push the “Export to .CSV” button.

OneStream is also a platform in the truest sense of the word. It provides direct integration with source data, drill-back to that data, and flexible, easy-to-use reporting and dashboarding tools.

That allows IT to eliminate the non-value-add cost of authoring reports AND the gremlins, all in one fell swoop.

Finally, ours is the only EPM platform that allows organizations to develop their own functionality directly on the platform. That’s right: OneStream is a full development platform where organizations can leverage all of the platform’s integration and reporting resources to deliver their own Intellectual Property (IP). They can even encrypt that IP within the platform or share it with others.

OneStream, in other words, allows organizations to manage their data effectively. In our e-book on financial data quality management, we shared the top three goals for effective financial data quality management with corporate performance management (CPM):

- Simplified data integration – Direct integration with any open GL/ERP system lets users drill back and drill through to source data.

- Improved data integrity – Powerful pre-load and post-load validations and confirmations ensure the right data is available at every step in the process.

- Increased transparency – 100% transparency and audit trails for data, metadata and process changes, with visibility from report to source.
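To make the idea of pre-load and post-load validation more concrete, here is a minimal Python sketch. It is not OneStream’s implementation or API; the GLRow record, the sign convention (debits positive, credits negative), the chart-of-accounts set and the source control total are all hypothetical assumptions chosen for illustration. Pre-load checks stop incomplete or unmapped rows before they are loaded, and post-load checks confirm the loaded data still balances and ties back to the source.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class GLRow:
    """One row of a hypothetical general-ledger extract (illustrative only)."""
    entity: str
    account: str
    amount: Decimal  # assumed convention: debits positive, credits negative


def pre_load_checks(rows, known_accounts):
    """Validate a batch before loading; returns a list of issue messages."""
    issues = []
    for i, row in enumerate(rows):
        if not row.entity:
            issues.append(f"row {i}: missing entity")
        if row.account not in known_accounts:
            issues.append(f"row {i}: unmapped account {row.account!r}")
    return issues


def post_load_checks(loaded_rows, source_control_total):
    """Confirm loaded data still ties out; returns a list of issue messages."""
    issues = []
    # In a balanced trial balance, each entity's debits and credits net to zero.
    net_by_entity = {}
    for row in loaded_rows:
        net_by_entity[row.entity] = net_by_entity.get(row.entity, Decimal("0")) + row.amount
    for entity, net in sorted(net_by_entity.items()):
        if net != 0:
            issues.append(f"entity {entity}: out of balance by {net}")
    # The sum of absolute amounts should match the control total from the source.
    loaded_total = sum((abs(r.amount) for r in loaded_rows), Decimal("0"))
    if loaded_total != source_control_total:
        issues.append(f"control total mismatch: loaded {loaded_total}, source {source_control_total}")
    return issues


if __name__ == "__main__":
    rows = [
        GLRow("US01", "4000", Decimal("-1000.00")),
        GLRow("US01", "1200", Decimal("1000.00")),
        GLRow("US01", "6100", Decimal("1000000.00")),  # a forgotten "+1,000,000" plug
    ]
    print(pre_load_checks(rows, {"4000", "1200"}))
    print(post_load_checks(rows, Decimal("2000.00")))
```

Returning a list of messages rather than raising on the first failure is a deliberate choice in this sketch: it lets a reviewer see every problem in a batch at once, which is closer to how a governed load process would report issues for sign-off.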

Conclusion

Data gremlins can disrupt business operations and have severe business consequences, making it essential for organizations to take control of their data management processes before gremlins emerge. By developing a data management strategy, investing in robust data management systems, conducting regular data audits, training employees on data management best practices and having a disaster recovery plan, organizations can prevent data-related issues and ensure business continuity.

Learn More

To learn more about how organizations are moving on from their data gremlins, download our whitepaper titled “Unify Connected Planning or Face the Hidden Cost.”